30 May 2023, by Yelle Lieder

Sustainability of programming languages: Java rather than TypeScript?

The sustainability and efficiency of technologies appear inseparable at first glance. Ultimately, increasing efficiency can save resources and electricity and thereby reduce environmental impacts. Following this narrative, in the context of digital sustainability, a study examining the energy efficiency of programming languages is often cited. But are efficient programming languages really more sustainable?

What are efficient programming languages really about?

The 2017 paper ‘Energy Efficiency across Programming Languages’ by Pereira et al. attempts to provide an answer to this. It examines programming languages for speed, energy consumption and memory usage. To do so, toy programs from the Computer Language Benchmarks Game were executed and measured at runtime.

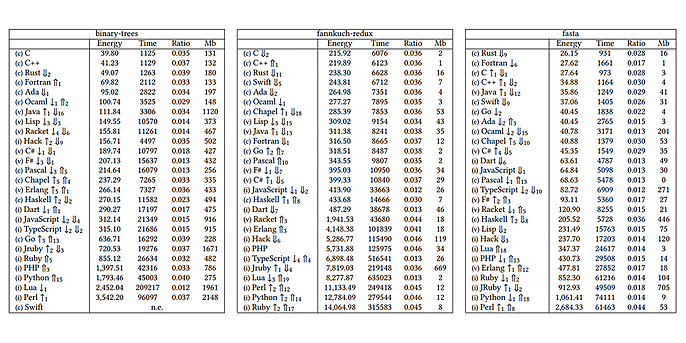

The results are hardly surprising. The paper states ‘it is common knowledge that these top 3 languages (C, C++ and Rust) are known to be heavily optimised and efficient for execution performance, as our data also shows’. The complete ranking can be seen in figure 1.

Figure 1: Results from three test scenarios. Source: Pereira, R., Couto, M., Ribeiro, F., Rua, R., Cunha, J., Fernandes, J.P., Saraiva, J. (2017): Energy efficiency across programming languages: how do energy, time, and memory relate?

Does it make sense to use energy efficiency at runtime as a metric?

Let us start with the obvious finding, which the authors themselves repeatedly emphasise. There is no linear relationship between execution time and energy consumption. So code that is executed twice as fast does not always consume half as much energy. The answer to one of the research questions ‘Is the faster language always the most energy efficient?’ is therefore clear: ‘No, a faster language is not always the most energy efficient’. In an interview with one of the authors, the results are once again put into context.
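One way to see why speed and energy do not move in lockstep is the basic relation energy = average power × time. The following minimal sketch uses purely hypothetical numbers (they are not taken from the paper) to show that a program finishing in half the time can still consume more energy if it draws more power while running.

```python
def energy_joules(avg_power_watts: float, runtime_seconds: float) -> float:
    """Energy is average power multiplied by runtime (E = P * t)."""
    return avg_power_watts * runtime_seconds


# Hypothetical measurements for illustration only, not results from the study.
slow_run = energy_joules(avg_power_watts=20.0, runtime_seconds=10.0)
fast_run = energy_joules(avg_power_watts=50.0, runtime_seconds=5.0)

# The fast run takes half the time but draws 2.5 times the power,
# so it ends up consuming more energy overall.
print(f"slow run: {slow_run} J, fast run: {fast_run} J")
```

In practice, the average power depends on factors such as how many cores are kept busy and how memory is used, which is why runtime alone is a poor proxy for energy.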

The authors of the toy programs used also write ‘We are deeply disinterested in the claim that the measurements of a few small programs define the relative performance of programming languages [...]’. This refers to the fact that the programs are not comparable with software that is used productively and often have completely different requirements profiles and that the results are therefore not transferable. There are important characteristics of languages that are not sufficiently accounted for in such a test. Running algorithms is one thing, but applying them in complex, distributed systems with numerous data transfers, asynchronous processes and access to long-term storage is something completely different.

Other aspects that need to be considered more discriminatingly include:

- Compilation and interpretation of source code into binary code: For example, the results of the paper do not include the energy required to compile Java code or to transpile TypeScript to JavaScript before executing it. Although these additional steps do not occur in all cases, they must be taken into account in order to make a fair comparison.

- Portability: If an application in C has to be adapted in order for it to be able to run on another system, this conversion again costs resources, for instance, on developers’ computers and in deployment pipelines.

- Debugging: Briefly testing changes at runtime in Flask Debug Mode requires less time and resources than compiling an entire Rust project, including all crates, before the bug fix can be checked. In addition, maintainability and readability have an influence on how many resources need to be invested in code adaptations. Debugging in the different languages can therefore also cost different amounts of working time and thus resources and energy.

Using the table as the sole basis of orientation is therefore misleading; instead, developers must always orient themselves based on the entire life cycle and the use case of the software to be developed.
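The life cycle argument above can be made concrete with a small back-of-the-envelope model. The sketch below is only an illustration under stated assumptions: the class name `LifecycleProfile`, its fields and all numbers are hypothetical, and the model ignores everything except build energy and runtime energy.

```python
from dataclasses import dataclass


@dataclass
class LifecycleProfile:
    """Hypothetical energy profile of a technology choice (all values assumed)."""
    build_energy_j: int  # energy per build or compile step, in joules
    builds: int          # number of builds over the project's life
    run_energy_j: int    # energy per execution, in joules


def total_energy_j(profile: LifecycleProfile, runs: int) -> int:
    """Total energy = energy spent on builds + energy spent on executions."""
    return profile.build_energy_j * profile.builds + profile.run_energy_j * runs


# Assumed values: the compiled option is cheaper per run but expensive to build.
compiled = LifecycleProfile(build_energy_j=5_000, builds=200, run_energy_j=10)
interpreted = LifecycleProfile(build_energy_j=0, builds=200, run_energy_j=40)

for runs in (10_000, 1_000_000):
    c = total_energy_j(compiled, runs)
    i = total_energy_j(interpreted, runs)
    better = "compiled" if c < i else "interpreted"
    print(f"{runs} runs: compiled={c} J, interpreted={i} J -> {better} uses less")
```

With these assumed numbers, the option that looks less efficient at runtime uses less energy overall when the software is executed rarely, and the picture flips only at high execution counts. Which side of that break-even point a real project lands on depends on the use case.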

What about framework performance?

The second comparison that is often cited is Web Framework Benchmarks. These include tasks such as JSON serialisation, database access and server-side template creation and compare performance, latency and overhead – but not energy efficiency. They make more realistic and more practical comparisons and test different combinations of tasks. The results of testing individual languages look very different compared to the results of comparing frameworks. For example, in the ‘Fortunes’ test scenario, the NodeJS framework (JavaScript) performs better than Spring (Java). With Django (Python) and Rails (Ruby), the situation is similar. Node and Spring of course cannot be easily substituted, but the comparison shows that for certain settings, ‘less efficient languages’ can also be a good choice.

This comparison also leaves some of the questions addressed above unanswered. Every productive system has different runtime requirements and uses functionalities of the frameworks either more, less or not at all. Here too, the entire development cycle is not taken into account. Making a decision for or against technologies solely based on energy efficiency benchmarks at runtime is too short-sighted. Taken to its logical conclusion, it would mean discussing whether object-oriented programming should be abandoned in favour of sustainability and closely coupled monoliths should be developed instead, because research shows that procedurally programmed monoliths (which are not easy to maintain, scale and so on) generate less overhead at runtime than object-oriented modular systems. The discussion can also be extended to open-source and low or no-code, but that is beyond the scope of this post.

How relevant is the efficiency of programming languages?

At scientific conferences and in expert committees in the field of sustainable IT, the efficiency of individual technologies plays a minor role. Although discussions occasionally drift too far into comparing the efficiency of algorithms or web technologies, new publications on the matter make up only a small proportion of what is available overall. The focus is instead on the efficient use of technologies, the avoidance of waste, hardware, energy sources and the economical use of computing power. But why is efficiency not more relevant?

- Maturity level: Software efficiency is a topic that has been very well understood for a long time. We know from benchmarks which technologies are efficient and to what degree. And because computing power was scarce for a long time, we have gained plenty of experience in developing high-performance software. So we are simply much further ahead when it comes to efficiency than, for example, the responsible use of IT hardware or the use of renewable energies.

- Efficiency: Compared to measures such as using renewable energies or extending the service life of hardware, improving the efficiency of individual software technologies is often only effective to a marginal degree. According to the Pareto principle, the first thing to do is concentrate on the possible courses of action that combine high impact with low investment costs before tackling costly, minimally effective optimisations in the area of efficiency.

- Conflicting goals: Increasing technological efficiency leads to the technologies being used more often and more intensively because the costs of using them are lower or using them is simply more convenient – because it is faster. This conflict between goals is also referred to as the rebound effect. So efficiency does not always automatically lead to more sustainability.

- Comparability: Only a few software technologies can be directly compared with one another. The two mentioned in the title of this blog post have different characteristics, functions and ecosystems and are optimised for different problems, which is why a choice between these two specific technologies rarely has to be made. A list that only considers runtime aspects of difficult-to-compare technologies should therefore not be the only thing used when deciding which technology to choose. What is more helpful is the comparison of specific characteristics depending on the use case. The RealWorld example apps, which can be used to test combinations of technologies, can provide some assistance here. However, in order to get the best out of each technology, it is even more expedient to compare different configurations of the same technology, for instance, a customised configuration versus the default configuration.
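The rebound effect mentioned in the list above comes down to simple arithmetic: total energy is the energy per use multiplied by the number of uses. The following sketch uses hypothetical figures (a 20% efficiency gain per request and a 50% increase in usage, both assumed for illustration) to show how an efficiency improvement can still raise total consumption.

```python
def total_energy_j(energy_per_request_j: float, requests: int) -> float:
    """Total energy = energy per request * number of requests."""
    return energy_per_request_j * requests


# Hypothetical scenario: all numbers are assumptions for illustration.
before = total_energy_j(energy_per_request_j=10.0, requests=1_000_000)
# Each request now needs 20% less energy, but the faster, cheaper
# service is used 50% more often.
after = total_energy_j(energy_per_request_j=8.0, requests=1_500_000)

relative_change = (after - before) / before
print(f"before: {before} J, after: {after} J, change: {relative_change:+.0%}")
```

Under these assumptions, total consumption rises by a fifth even though every single request has become more efficient.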

What really matters

Focusing on efficiency alone is misleading. As long as we do not think about where the electricity comes from and how much hardware we load with our software, the potential for improvement based purely on the efficiency of individual technologies is too small. The discussion about programming languages distracts us from the real issues (e-waste, waste of computing power, energy sources). Moreover, we have to stop using ‘sustainability’ and ‘efficiency’ synonymously.

What we need to do instead is look at all of the lifecycle costs concerning digital solutions, from hardware for development and operation to requirements, concept and design, the choice of technology and development, all the way to operation and maintenance. There is also still far too little research that has been done on the software development processes – that is, the influence of work equipment, travel, test environments and pipelines. One-dimensional analyses therefore do not bring us any closer to achieving sustainability goals.
